Surface Quality Detection Techniques

Background

The goal of this project is to propose an approach for detecting edge and surface quality in the post-production phase using readily available, familiar devices.

My role was to acquire and process the images before they were fed into a vision transformer.

Technical Details

The overall framework of the proposed approach is shown below.

DOE and Machining Parameters

The design of experiments (DOE) uses a full factorial design with three factors, each at three levels, as shown in Table 1. This gives 3 × 3 × 3 = 27 combinations.

The three factors are the resolution of the image-capturing device, the illumination, and the cutting speed. The surface roughness of the parts was measured using a Mitutoyo SJ-410 profilometer.
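As a minimal sketch of how the full factorial runs described above could be enumerated, the snippet below builds every combination of three factors at three levels. The illumination labels come from the text; the resolution and cutting-speed level names are hypothetical placeholders, since the actual values belong in Table 1.

```python
from itertools import product

# Three factors, three levels each. Only the illumination labels are taken
# from the text; the other level names are hypothetical placeholders.
factors = {
    "resolution": ["res_level_1", "res_level_2", "res_level_3"],
    "illumination": ["ambient", "ring", "angled"],
    "cutting_speed": ["speed_level_1", "speed_level_2", "speed_level_3"],
}

# Full factorial design: the Cartesian product of all factor levels.
runs = [dict(zip(factors, combo)) for combo in product(*factors.values())]


def run_label(run):
    """Join the levels of one run into a single label, e.g. for a folder name."""
    return "__".join(run[name] for name in factors)


labels = [run_label(run) for run in runs]
print(len(runs))   # number of experimental combinations
print(labels[0])   # label of the first run
```

Each label is unique, so it could double as the folder name for the images captured under that combination.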

Table 1: Factors and Levels for the DOE

Microscope Data


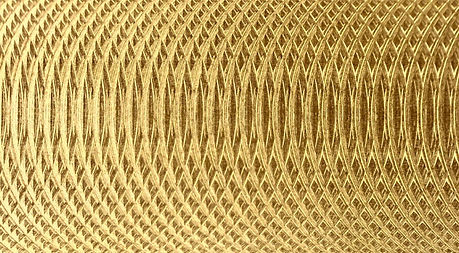

The CNC-machined brass parts were classified into three main categories according to the cutting speed used to machine them (low, medium, and coarse) and the cutting tool size (1/2 in, 1/4 in, 3/8 in). To collect a large amount of data on brass surfaces, a microscope was set up to capture images under three lighting conditions: ambient lighting, ring lighting, and angled lighting. Each brass part was divided into several passes, each corresponding to a cutting speed, and multiple images were captured for every pass. The figure below shows one example of the 27 combinations that I captured for this project.


After the images of all the combinations were gathered, the data was fed into a Python script that performed patch extraction. The script generated multiple patches of a standardized size from each image and stored them in their corresponding folders. The images in these folders were then labeled by surface roughness using a vision transformer.
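The patch extraction step described above can be sketched as a function that computes fixed-size crop boxes over an image. This is an illustrative sketch, not the project's actual script: the function name `patch_boxes`, the 640 × 480 image size, and the 224-pixel patch size are all assumptions chosen for the example.

```python
def patch_boxes(width, height, patch_size, stride=None):
    """Return (left, upper, right, lower) boxes for square patches.

    Only patches that fit entirely inside the image are returned, so every
    patch has the same dimensions. With stride equal to patch_size (the
    default) the patches do not overlap.
    """
    if stride is None:
        stride = patch_size
    if patch_size <= 0 or stride <= 0:
        raise ValueError("patch_size and stride must be positive")

    boxes = []
    for top in range(0, height - patch_size + 1, stride):
        for left in range(0, width - patch_size + 1, stride):
            boxes.append((left, top, left + patch_size, top + patch_size))
    return boxes


# Hypothetical image and patch sizes, for illustration only.
boxes = patch_boxes(width=640, height=480, patch_size=224)
for box in boxes:
    print(box)
```

Each box uses the (left, upper, right, lower) convention, so it could be passed to Pillow's `Image.crop` and the resulting patch saved into the folder for its combination.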

An example of a brass part with four machining passes, from which multiple images are taken.

Below are some examples of brass surfaces under the microscope, showing variations in texture and finish for different tool sizes and cutting speeds.

Outcomes/Lessons Learned

- Conducted a full-factorial DOE, considering multiple critical parameters for reliable data collection.
- Gained foundational knowledge of machine learning and Vision Transformers for post-production quality detection.
- Learned principles of CNC machining, including the impact of cutting speeds and tool sizes on part quality.
- Systematically organized images into properly labeled folders to maintain consistency and prevent errors.
- Performed patch extraction in Python and saved outputs into structured post-processing folders.
- Identified angled lighting as the optimal setup, producing clear and uniform image colors.
- Applied additional image processing to ambient-light captures to enhance brightness.
- Ensured accuracy in machine learning outcomes by maintaining strict attention to detail and organization during image acquisition.
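The text does not say which processing was used to brighten the ambient-light captures. One common option is gamma correction, sketched below as an assumption rather than the method actually applied. The function name `gamma_lut` and the gamma value of 0.5 are hypothetical.

```python
def gamma_lut(gamma):
    """Build a 256-entry lookup table for 8-bit gamma correction.

    Each input value v maps to 255 * (v / 255) ** gamma. With this
    convention, gamma < 1 brightens the image and gamma > 1 darkens it.
    """
    if gamma <= 0:
        raise ValueError("gamma must be positive")
    return [round(255 * (v / 255) ** gamma) for v in range(256)]


# Hypothetical gamma value; in practice it would be tuned per capture setup.
lut = gamma_lut(0.5)
print(lut[0], lut[255])  # the endpoints 0 and 255 are preserved
```

For a single-band 8-bit image, a table like this could be applied with Pillow's `Image.point`.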